Update: I accidentally used newSingleThreadScheduledExecutor instead of newFixedThreadPool(1) for the "Dedicated" test case [ide code completion ..]. With this corrected, "Dedicated" performs even better. See the follow-up post for updated results plus cache miss measurements taken with the "perf" tool (they do not really change the big picture).

The experiment in my last post had a serious flaw: In an actor system, operations on a single actor are executed one after the other. However, by naively adding message-processing jobs to executors, private actor state was accessed concurrently, leading to "false-sharing" and cache coherency related costs, especially for small local state sizes.

Therefore I modified the test. For each actor, the next message-processing job is scheduled only once the previous one has finished, so the experiment correctly resembles the behaviour of typical actors (or lightweight processes/tasks/fibers), without concurrent access to a memory region.
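One way to get this "one message at a time per actor" behaviour on top of a shared executor is sketched below. This is an assumption about how such scheduling can be done, not the code used in the benchmark; the class `SerializedActor` and its members are hypothetical names. A counter of pending messages decides whether a new job has to be submitted, and each finished job submits the next one itself.

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executor;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.atomic.AtomicInteger;

/** Hypothetical actor wrapper: at most one message of this actor runs at any time. */
class SerializedActor {
    private final Executor executor;                       // shared pool the actor runs on
    private final Queue<Runnable> mailbox = new ConcurrentLinkedQueue<>();
    private final AtomicInteger pending = new AtomicInteger(); // queued + currently running messages
    long processed;                                        // actor-local state, never touched concurrently

    SerializedActor(Executor executor) { this.executor = executor; }

    void send(Runnable message) {
        mailbox.add(message);
        // Only the sender that moves the counter from 0 to 1 submits a job;
        // otherwise a job for this actor is already queued or running.
        if (pending.getAndIncrement() == 0) {
            executor.execute(this::processNext);
        }
    }

    private void processNext() {
        mailbox.poll().run();
        // Schedule the next message only after the previous one has finished.
        if (pending.decrementAndGet() > 0) {
            executor.execute(this::processNext);
        }
    }

    /** Sends 'messages' unsynchronized increments to one actor and returns the final count. */
    static long demo(int messages) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        SerializedActor actor = new SerializedActor(pool);
        CountDownLatch done = new CountDownLatch(messages);
        for (int i = 0; i < messages; i++) {
            actor.send(() -> { actor.processed++; done.countDown(); });
        }
        try {
            done.await();                                  // wait before shutdown: jobs resubmit themselves
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
        return actor.processed;
    }

    public static void main(String[] args) {
        System.out.println("processed = " + demo(10_000));
    }
}
```

Because no two jobs of the same actor ever overlap, the plain `processed++` needs no lock, even though consecutive messages may run on different pool threads.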

Experiment roundup:

Several million messages are scheduled to several "Actor" simulating classes. Message processing is simulated by reading and writing the private, actor-local state in random order. There are more Actors (24-8000) than threads (6-8). Note that results established (if any) will also hold true for other light-weight concurrency schemes like go-routines, fibers, tasks ...

The test is done with

- ThreadPoolExecutor

- WorkStealingExecutor

- Dedicated Thread (Each Actor has a fixed assignment to a worker thread)
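The three schemes can be set up roughly as follows. This is a sketch under assumptions: `Executors.newFixedThreadPool` and `Executors.newWorkStealingPool` are standard JDK factory methods (the latter since Java 8), but whether the benchmark creates its executors exactly this way is not confirmed here, and the `ExecutionSchemes` and `Dedicated` classes as well as the modulo mapping are hypothetical.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class ExecutionSchemes {

    /** "Dedicated": one single-threaded executor per worker; an actor always maps to the same one. */
    static final class Dedicated {
        private final ExecutorService[] workers;

        Dedicated(int threads) {
            workers = new ExecutorService[threads];
            for (int i = 0; i < threads; i++) {
                workers[i] = Executors.newFixedThreadPool(1);
            }
        }

        /** Fixed assignment (assumed here to be a simple modulo on the actor id). */
        int workerIndexFor(int actorId) { return actorId % workers.length; }

        void execute(int actorId, Runnable message) {
            workers[workerIndexFor(actorId)].execute(message);
        }

        void shutdown() {
            for (ExecutorService w : workers) w.shutdown();
        }
    }

    /** Plain thread pool: any idle thread takes the next job from one shared queue. */
    static ExecutorService threadPool(int threads) {
        return Executors.newFixedThreadPool(threads);
    }

    /** Work stealing: per-thread queues, idle threads steal from busy ones. */
    static ExecutorService workStealing(int threads) {
        return Executors.newWorkStealingPool(threads);
    }

    public static void main(String[] args) {
        Dedicated dedicated = new Dedicated(8);
        for (int round = 0; round < 3; round++) {
            // All three messages of actor 5 are executed by the same thread.
            dedicated.execute(5, () ->
                System.out.println("actor 5 on " + Thread.currentThread().getName()));
        }
        dedicated.shutdown();
    }
}
```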

Simulating an Actor accessing local state:
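A minimal sketch of such a simulation, assuming the local state is a plain byte array (the class `SimulatedActor` and its method names are hypothetical and not taken from the benchmark source):

```java
import java.util.concurrent.ThreadLocalRandom;

class SimulatedActor {
    private final byte[] state;            // private, unshared actor-local state

    SimulatedActor(int localStateBytes) {
        state = new byte[localStateBytes];
    }

    /** Simulates processing one message: memAccesses read+write pairs at random positions. */
    int receive(int memAccesses) {
        ThreadLocalRandom rnd = ThreadLocalRandom.current();
        int sum = 0;
        for (int i = 0; i < memAccesses; i++) {
            int idx = rnd.nextInt(state.length);
            sum += state[idx];             // read
            state[idx]++;                  // write
        }
        return sum;                        // returned so the reads cannot be optimized away
    }

    /** Sum over the whole state; only used to check the simulation. */
    long checksum() {
        long total = 0;
        for (byte b : state) total += b;
        return total;
    }

    public static void main(String[] args) {
        SimulatedActor actor = new SimulatedActor(2000);
        actor.receive(100);
        System.out.println("checksum after one message: " + actor.checksum());
    }
}
```

The larger `localStateBytes` is, the less likely it is that two random accesses hit the same cache line, which is the knob varied on the x-axis of the measurements.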

Full Source of Benchmark

Hypothesis:

As ThreadPoolExecutor and WorkStealingExecutor schedule each message on a random thread, they will produce more cache misses than pinning each actor to a fixed thread. My speculation is that work stealing cannot make up for the costs provoked by these cache misses.

(Some) Variables:

- Number of worker threads

- Number of actors

- Amount of work per message

- Locality / Size of private unshared actor state

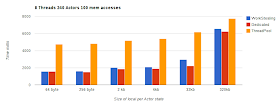

8 Threads 24 actors 100 memory accesses (per msg)

Interpretation:

For this particular load, fixed assigned threads outperform executors. Note: the larger the local state of an actor, the higher the probability of a prefetch fail => cache miss. In this scenario my hypothesis holds true: work stealing cannot make up for the number of cache misses. Fixed assigned threads profit because it is likely that some state of a previously processed message still resides in cache when a new message is processed on an actor.

It's remarkable how badly ThreadPoolExecutor performs in this experiment.

This is a scenario typical for a backend-type service: there are few actors with high load. When running a front end server with many clients, there are probably more actors, as typically there is one actor per client session. Therefore let's push up the number of actors to 8000:

8 Threads 8000 actors 100 memory accesses (per msg)

Interpretation:

With this number of actors, all execution schemes suffer from cache misses, as the accumulated state of 8000 actors is too big to fit into L1 cache. Therefore the cache advantage of fixed-assigned threads ("Dedicated") does not make up for the lack of work stealing. The work-stealing executor outperforms any other execution scheme if a large amount of state is involved.

This is a somewhat unrealistic scenario, as in a real server application client requests probably do not arrive "round robin"; some clients are more active than others. So in practice I'd expect "Dedicated" to have at least some advantage from higher cache hit rates. Anyway: when serving many (stateful) clients, WorkStealing can be expected to outperform.
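The L1 argument can be made concrete with a rough back-of-the-envelope calculation. The sketch below is a simplification: it assumes actors are spread evenly over the threads, counts only the actor state itself, and ignores cache line granularity, object headers and everything else competing for the cache. The cache sizes are the L1d and L2 values from the lscpu output of the test machine; the class `WorkingSet` is hypothetical.

```java
class WorkingSet {
    static final long L1D_BYTES = 32 * 1024;   // "L1d cache: 32K"
    static final long L2_BYTES = 256 * 1024;   // "L2 cache: 256K"

    /** Bytes of actor state one thread touches if actors are spread evenly over threads. */
    static long perThreadBytes(int actors, int threads, long stateBytes) {
        int actorsPerThread = (actors + threads - 1) / threads; // rounded up
        return actorsPerThread * stateBytes;
    }

    static String fitsIn(long bytes) {
        if (bytes <= L1D_BYTES) return "L1d";
        if (bytes <= L2_BYTES) return "L2";
        return "neither L1d nor L2";
    }

    public static void main(String[] args) {
        // 24 actors on 8 threads, 2000 bytes each: 3 actors * 2000 bytes = 6000 bytes per thread
        long few = perThreadBytes(24, 8, 2000);
        // 8000 actors on 8 threads, 64 bytes each: 1000 actors * 64 bytes = 64000 bytes per thread
        long many = perThreadBytes(8000, 8, 64);
        System.out.println(few + " bytes per thread, fits in " + fitsIn(few));
        System.out.println(many + " bytes per thread, fits in " + fitsIn(many));
    }
}
```

Under these assumptions, even the smallest state size (64 bytes) already exceeds the 32K L1d per thread once 8000 actors are involved, while with 24 actors a few thousand bytes of state per actor still fit.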

Just to get a third variant: same test with 240 actors:

These results complete the picture: with fewer actors, cache effects outweigh work stealing. The higher the number of actors, the higher the number of cache misses gets, so work stealing starts outperforming dedicated threads.

Modifying other variables

Number of memory accesses

If processing a message involves only a few memory accesses, work stealing improves compared to the other two. Reason: fewer memory accesses mean fewer cache misses, so the benefit of work stealing becomes more significant in the overall result.

Worker Threads: 8, actors: 24, #mem accesses: 20

| local state bytes | WorkStealing avg | ThreadPool avg | Dedicated avg |
| --- | --- | --- | --- |
| 64 | 505 | 2001 | 557 |
| 256 | 471 | 1996 | 561 |
| 2000 | 589 | 2109 | 600 |
| 4000 | 625 | 2096 | 600 |
| 32000 | 687 | 2328 | 640 |
| 320000 | 667 | 3070 | 738 |
| 3200000 | 1341 | 3997 | 1428 |

Fewer worker threads

Fewer worker threads (e.g. 6) increase the probability of an actor message being scheduled to the "right" thread "by accident", so the cache miss penalty is lower, which lets work stealing perform better than "Dedicated" (the fewer threads used, the lower the cache advantage of fixed assigned "Dedicated" threads). Vice versa: if the number of cores involved increases, fixed thread assignment gets ahead.

Worker Threads: 6, actors: 18, #mem accesses: 100

| local state bytes | WorkStealing avg | ThreadPool avg | Dedicated avg |
| --- | --- | --- | --- |
| 64 | 2073 | 2498 | 2045 |
| 256 | 1735 | 2272 | 1815 |
| 2000 | 2052 | 2412 | 2048 |
| 4000 | 2183 | 2373 | 2130 |
| 32000 | 3501 | 3204 | 2822 |
| 320000 | 3089 | 2999 | 2543 |
| 3200000 | 6579 | 6047 | 6907 |

Machine tested (real cores, no HT):

$ lscpu

Architecture: x86_64

CPU op-mode(s): 32-bit, 64-bit

Byte Order: Little Endian

CPU(s): 12

On-line CPU(s) list: 0-11

Thread(s) per core: 1

Core(s) per socket: 6

Socket(s): 2

NUMA node(s): 2

Vendor ID: GenuineIntel

CPU family: 6

Model: 44

Stepping: 2

CPU MHz: 3067.058

BogoMIPS: 6133.20

Virtualization: VT-x

L1d cache: 32K

L1i cache: 32K

L2 cache: 256K

L3 cache: 12288K

NUMA node0 CPU(s): 1,3,5,7,9,11

NUMA node1 CPU(s): 0,2,4,6,8,10

Conclusion

- Performance of executors depends heavily on the use case. There are workloads where cache locality dominates, giving fixed thread assignment an advantage of up to 30% over the work-stealing executor.

- Performance of executors varies among different CPU types and models (L1 cache size and the cost of a cache miss matter here).

- WorkStealing could be viewed as the better overall solution, especially if a lot of L1 cache misses are to be expected anyway.

- The ideal executor would be WorkStealing with a soft actor-to-thread affinity. This would combine the strengths of both execution schemes and would yield significant performance improvements for many workloads.

- Vanilla thread pools without work stealing and without actor-to-thread affinity perform significantly worse and should not be used to execute lightweight processes.
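To illustrate what a "soft" affinity could mean, here is a small sketch of the dispatch decision only. It is not a work-stealing implementation and not something measured in this post: it is a simpler load-aware rule that keeps an actor on its home thread unless that thread has fallen too far behind. The class `SoftAffinity`, the method `chooseWorker` and the `maxImbalance` threshold are all hypothetical.

```java
class SoftAffinity {

    /**
     * Picks the worker for an actor's next message.
     * Stays on the home worker (warm cache) unless its queue is more than
     * maxImbalance jobs longer than the shortest queue; then moves to that one.
     */
    static int chooseWorker(int home, int[] queueLengths, int maxImbalance) {
        int least = 0;
        for (int i = 1; i < queueLengths.length; i++) {
            if (queueLengths[i] < queueLengths[least]) least = i;
        }
        boolean overloaded = queueLengths[home] - queueLengths[least] > maxImbalance;
        return overloaded ? least : home;
    }

    public static void main(String[] args) {
        // Home worker 2 is only slightly busier: stay for cache locality.
        System.out.println(chooseWorker(2, new int[] {0, 0, 3, 0}, 4));
        // Home worker 2 is far behind: give up affinity and move to worker 0.
        System.out.println(chooseWorker(2, new int[] {0, 1, 9, 1}, 4));
    }
}
```

A real executor built on this idea would additionally have to make sure an actor is only moved while none of its messages is queued or running on the old thread, otherwise the one-message-at-a-time guarantee is lost.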

Source of Benchmark